Extracting data from websites (web scraping) is a useful technique to automate the collection of information from web pages and import it into Excel. This article provides a comprehensive guide to using VBA in Excel for web scraping, including a complete script, explanation, step-by-step instructions, and key considerations.

Nội dung

1. VBA Code for Extracting Data

Below is the complete VBA code to scrape URLs and article titles from a multi-page category on a website:

Sub ScrapeMultiplePages()

Dim ie As Object ' Internet Explorer object

Dim doc As Object ' HTMLDocument object

Dim links As Object ' Collection of links

Dim link As Object ' Individual link

Dim baseUrl As String ' Base URL

Dim page As Integer ' Page counter

Dim i As Long ' Row counter

' Base URL of the category (replace {PAGE} with the page number)

baseUrl = "http://example.com/category/page/{PAGE}/" ' Replace with your actual URL

' Initialize Internet Explorer

Set ie = CreateObject("InternetExplorer.Application")

ie.Visible = False ' Do not display the browser to save resources

' Set up the first row in Excel

i = 1

Cells(1, 1).Value = "URL"

Cells(1, 2).Value = "Article Title"

i = 2 ' Data starts from the second row

' Loop through pages

For page = 1 To 20 ' Adjust the number 20 to match the maximum number of pages

' Create the URL for each page

Dim currentUrl As String

currentUrl = Replace(baseUrl, "{PAGE}", page)

ie.navigate currentUrl

' Wait for the web page to load

Do While ie.readyState <> 4 Or ie.Busy

DoEvents

Loop

' Get the HTML document from the web page

Set doc = ie.document

' Get all links on the page

Set links = doc.getElementsByTagName("a")

' Write URLs and article titles into Excel

For Each link In links

If Not IsEmpty(link.href) And link.href <> "" Then

' Filter only article links (exclude unnecessary links)

If InStr(link.href, "/category/") = 0 And InStr(link.href, "/tag/") = 0 Then

' Extract article title

If link.innerText <> "" Then

Cells(i, 1).Value = link.href ' Write URL into column A

Cells(i, 2).Value = link.innerText ' Write article title into column B

i = i + 1

End If

End If

End If

Next link

' Exit the loop if no links are found on the page

If links.Length = 0 Then Exit For

Next page

' Close the browser

ie.Quit

Set ie = Nothing

MsgBox "Data extraction from all pages completed!"

End Sub

2. Explanation of the Code

Base URL with Pagination: The base URL contains {PAGE}, which is replaced by page numbers during each iteration of the loop.

Page Loop: For page = 1 To 20 iterates through pages 1 to 20. Adjust the range to match the actual number of pages in your category.

Filtering Links: If InStr(link.href, "/category/") = 0 And InStr(link.href, "/tag/") = 0 ensures that only article links are captured, excluding category or tag links.

Extracting Titles: link.innerText retrieves the visible text of the link, which serves as the article title.

Exit Condition: If no links are found on a page (links.Length = 0), the script stops scraping further pages.

3. Step-by-Step Instructions

Step 1: Set up Excel VBA:

Open Excel and press Alt + F11 to access the VBA editor.

Insert a new module (Insert > Module) and paste the above code.

Step 2: Adjust the Base URL:

Replace "http://example.com/category/page/{PAGE}/" with the actual URL of the category or section you want to scrape.

For example: "https://example.com/category/news/page/{PAGE}/".

Step 3: Run the Script:

Press F5 in the VBA editor to run the macro.

URLs and article titles will be written into the Excel worksheet.

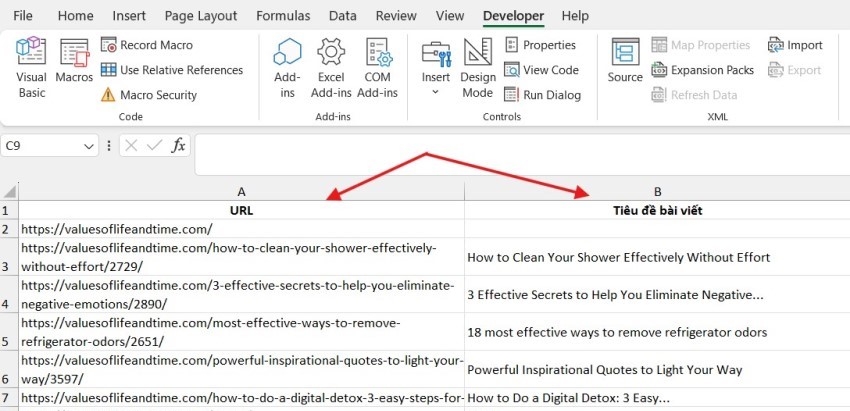

Step 4: Verify the Results:

Column A: Contains the URLs of the articles.

Column B: Contains the titles of the articles.

4. Key Considerations

– Handling JavaScript-Loaded Pages: If the webpage uses JavaScript to dynamically load content, VBA may not work properly. In such cases, consider using Selenium for VBA or another tool like Python’s BeautifulSoup.

– Check Website Policies: Always check the website’s Terms of Service or robots.txt file to ensure that web scraping is allowed.

– Removing Duplicates: Use Excel’s “Remove Duplicates” feature to clean up duplicate URLs or titles if necessary.

– Adjusting the Loop: If the number of pages is unknown, use a higher number (e.g., For page = 1 To 100). The script will stop when it finds an empty page.

This VBA script automates the process of extracting URLs and article titles from a multi-page category on a website. It is highly customizable, allowing you to adjust the base URL and the number of pages. While effective for basic HTML-based websites, this approach may face limitations with JavaScript-heavy pages, which require advanced tools. By following the steps and considerations outlined, you can efficiently scrape data and integrate it into your Excel workflow.